作者留言

最近忙着机械臂的开发,更新不是很频繁,手眼标定和路径规划这两块从啥也不懂到有了一定的成果,付出很多心血,因此决定开一个新系列总结一下开发的心路历程。废话少说,先从深度相机开始:

准备工作

- 首先,安装 Python 3.10和虚拟环境。

- 安装 python 包:

pip3 install pyorbbecsdk-2.0.10-cp310-cp310-win_amd64.whl - 导航到 site-packages/pyorbbec/examples 目录并安装所需的依赖项:

pip3 install -r requirements.txt

获取色彩图

import cv2

from pyorbbecsdk import *

from utils import frame_to_bgr_image

ESC_KEY = 27

def main():

config = Config()

pipeline = Pipeline()

try:

profile_list = pipeline.get_stream_profile_list(OBSensorType.COLOR_SENSOR)

try:

color_profile: VideoStreamProfile = profile_list.get_video_stream_profile(640, 0, OBFormat.RGB, 30)

except OBError as e:

print(e)

color_profile = profile_list.get_default_video_stream_profile()

print("color profile: ", color_profile)

config.enable_stream(color_profile)

except Exception as e:

print(e)

return

pipeline.start(config)

while True:

try:

frames: FrameSet = pipeline.wait_for_frames(100)

if frames is None:

continue

color_frame = frames.get_color_frame()

if color_frame is None:

continue

# covert to RGB format

color_image = frame_to_bgr_image(color_frame)

if color_image is None:

print("failed to convert frame to image")

continue

cv2.imshow("Color Viewer", color_image)

key = cv2.waitKey(1)

if key == ord('q') or key == ESC_KEY:

break

except KeyboardInterrupt:

break

cv2.destroyAllWindows()

pipeline.stop()

if __name__ == "__main__":

main()获取深度图

import time

import cv2

import numpy as np

from pyorbbecsdk import *

ESC_KEY = 27

PRINT_INTERVAL = 1 # seconds

MIN_DEPTH = 20 # 20mm

MAX_DEPTH = 10000 # 10000mm

class TemporalFilter:

def __init__(self, alpha):

self.alpha = alpha

self.previous_frame = None

def process(self, frame):

if self.previous_frame is None:

result = frame

else:

result = cv2.addWeighted(frame, self.alpha, self.previous_frame, 1 - self.alpha, 0)

self.previous_frame = result

return result

def main():

config = Config()

pipeline = Pipeline()

temporal_filter = TemporalFilter(alpha=0.5)

try:

profile_list = pipeline.get_stream_profile_list(OBSensorType.DEPTH_SENSOR)

assert profile_list is not None

depth_profile = profile_list.get_default_video_stream_profile()

assert depth_profile is not None

print("depth profile: ", depth_profile)

config.enable_stream(depth_profile)

except Exception as e:

print(e)

return

pipeline.start(config)

last_print_time = time.time()

while True:

try:

frames = pipeline.wait_for_frames(100)

if frames is None:

continue

depth_frame = frames.get_depth_frame()

if depth_frame is None:

continue

depth_format = depth_frame.get_format()

if depth_format != OBFormat.Y16:

print("depth format is not Y16")

continue

width = depth_frame.get_width()

height = depth_frame.get_height()

scale = depth_frame.get_depth_scale()

depth_data = np.frombuffer(depth_frame.get_data(), dtype=np.uint16)

depth_data = depth_data.reshape((height, width))

depth_data = depth_data.astype(np.float32) * scale

depth_data = np.where((depth_data > MIN_DEPTH) & (depth_data < MAX_DEPTH), depth_data, 0)

depth_data = depth_data.astype(np.uint16)

# Apply temporal filtering

depth_data = temporal_filter.process(depth_data)

center_y = int(height / 2)

center_x = int(width / 2)

center_distance = depth_data[center_y, center_x]

current_time = time.time()

if current_time - last_print_time >= PRINT_INTERVAL:

print("center distance: ", center_distance)

last_print_time = current_time

depth_image = cv2.normalize(depth_data, None, 0, 255, cv2.NORM_MINMAX, dtype=cv2.CV_8U)

depth_image = cv2.applyColorMap(depth_image, cv2.COLORMAP_JET)

cv2.imshow("Depth Viewer", depth_image)

key = cv2.waitKey(1)

if key == ord('q') or key == ESC_KEY:

break

except KeyboardInterrupt:

break

cv2.destroyAllWindows()

pipeline.stop()

if __name__ == "__main__":

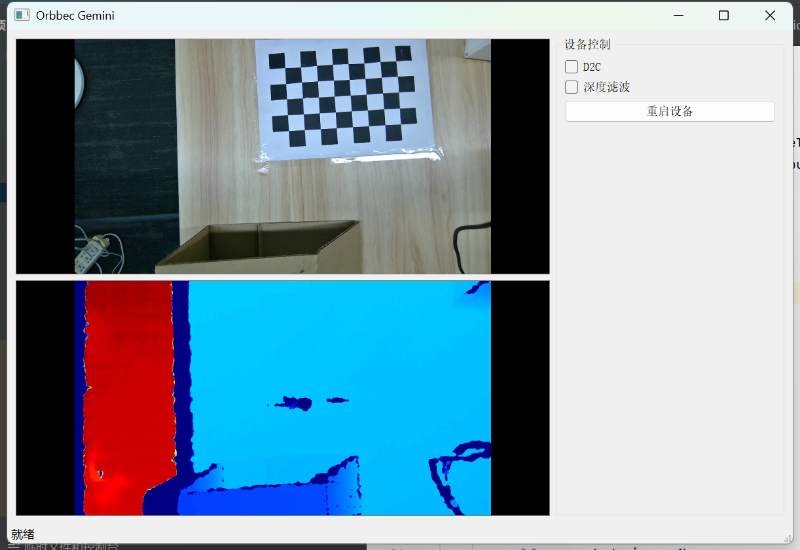

main()同时显示深度和色彩图

import sys

import cv2

import numpy as np

from PyQt5.QtWidgets import (

QApplication, QMainWindow, QWidget, QLabel, QVBoxLayout, QHBoxLayout,

QFormLayout, QPushButton, QCheckBox, QGroupBox

)

from PyQt5.QtCore import Qt, QTimer

from PyQt5.QtGui import QImage, QPixmap

from pyorbbecsdk import *

from utils import frame_to_bgr_image

MIN_DEPTH = 10

MAX_DEPTH = 1300

RGB_WIDTH, RGB_HEIGHT = 848, 480

DEPTH_WIDTH, DEPTH_HEIGHT = 848, 480

class OrbbecCameraApp(QMainWindow):

def __init__(self):

super().__init__()

self.pipeline = None

self.context = None

self.current_device = None

self.rgb_img = None

self.depth_mm = None

self.frames = None

self.init_ui()

self.init_camera()

self.setup_data_processing()

def init_ui(self):

self.setWindowTitle("Orbbec Gemini")

self.setGeometry(100, 100, 1200, 700)

main_widget = QWidget()

self.setCentralWidget(main_widget)

main_layout = QHBoxLayout(main_widget)

video_panel = QVBoxLayout()

self.rgb_display = self.create_video_label("RGB 视频流")

self.depth_display = self.create_video_label("深度图")

video_panel.addWidget(self.rgb_display)

video_panel.addWidget(self.depth_display)

control_panel = self.create_control_panel()

main_layout.addLayout(video_panel, 70)

main_layout.addWidget(control_panel, 30)

self.statusBar().showMessage("就绪")

def create_video_label(self, title):

label = QLabel(title)

label.setAlignment(Qt.AlignCenter)

label.setMinimumSize(640, 360)

label.setStyleSheet("border: 1px solid gray; background-color: black; color: white; font-size: 16px;")

return label

def create_control_panel(self):

panel = QGroupBox("设备控制")

layout = QFormLayout()

self.auto_D2D_cb = QCheckBox("D2C")

self.auto_D2D_cb.stateChanged.connect(self.toggle_D2D)

self.soft_filter_cb = QCheckBox("深度滤波")

self.soft_filter_cb.stateChanged.connect(self.toggle_soft_filter)

self.reboot_btn = QPushButton("重启设备", clicked=self.reboot_device)

layout.addRow(self.auto_D2D_cb)

layout.addRow(self.soft_filter_cb)

layout.addRow(self.reboot_btn)

panel.setLayout(layout)

return panel

def init_camera(self):

try:

self.context = Context()

devices = self.context.query_devices()

if devices.get_count() == 0:

self.show_status("未检测到设备")

return False

self.current_device = devices.get_device_by_index(0)

self.init_pipeline()

self.timer = QTimer(self)

self.timer.timeout.connect(self.process_frames)

self.timer.start(30)

return True

except OBError as e:

self.show_error(f"初始化失败: {str(e)}")

return False

def init_pipeline(self):

try:

self.pipeline = Pipeline()

config = Config()

color_profiles = self.pipeline.get_stream_profile_list(OBSensorType.COLOR_SENSOR)

self.color_profile = color_profiles.get_video_stream_profile(RGB_WIDTH, RGB_HEIGHT, OBFormat.RGB, 30)

config.enable_stream(self.color_profile)

depth_profiles = self.pipeline.get_stream_profile_list(OBSensorType.DEPTH_SENSOR)

self.depth_profile = depth_profiles.get_video_stream_profile(DEPTH_WIDTH, DEPTH_HEIGHT, OBFormat.Y16, 30)

config.enable_stream(self.depth_profile)

self.pipeline.enable_frame_sync()

self.pipeline.start(config)

return True

except OBError as e:

self.show_error(f"流配置错误: {str(e)}")

return False

def setup_data_processing(self):

self.depth_scale = 0.1

self.prev_depth = None

self.alpha = 0.5

def process_frames(self):

try:

self.frames = self.pipeline.wait_for_frames(100)

if not self.frames:

return

color_frame = self.frames.get_color_frame()

depth_frame = self.frames.get_depth_frame()

if color_frame:

self.rgb_img = frame_to_bgr_image(color_frame)

self.display_image(self.rgb_img, self.rgb_display)

if depth_frame:

h, w = depth_frame.get_height(), depth_frame.get_width()

self.depth_scale = depth_frame.get_depth_scale()

raw_depth = np.frombuffer(depth_frame.get_data(), dtype=np.uint16).reshape((h, w))

f_depth = raw_depth.astype(np.float32) * self.depth_scale

if self.prev_depth is not None:

filtered = cv2.addWeighted(f_depth, self.alpha, self.prev_depth, 1 - self.alpha, 0)

else:

filtered = f_depth

self.prev_depth = filtered.copy()

valid_depth = np.where((filtered > MIN_DEPTH) & (filtered < MAX_DEPTH), filtered, 0)

depth_vis = cv2.normalize(valid_depth, None, 0, 255, cv2.NORM_MINMAX, cv2.CV_8U)

depth_color = cv2.applyColorMap(depth_vis, cv2.COLORMAP_JET)

self.display_image(depth_color, self.depth_display)

self.depth_mm = filtered

except OBError as e:

self.show_error(f"帧获取失败: {str(e)}")

def toggle_D2D(self, state):

try:

self.current_device.set_bool_property(OBPropertyID.OB_PROP_SDK_DISPARITY_TO_DEPTH_BOOL, bool(state))

except OBError as e:

self.show_error(f"D2D失败: {str(e)}")

def toggle_soft_filter(self, state):

try:

self.current_device.set_bool_property(OBPropertyID.OB_PROP_DEPTH_SOFT_FILTER_BOOL, bool(state))

except OBError as e:

self.show_error(f"滤波设置失败: {str(e)}")

def reboot_device(self):

try:

self.current_device.reboot()

self.show_status("设备重启中...")

QTimer.singleShot(3000, self.reconnect_device)

except OBError as e:

self.show_error(f"重启失败: {str(e)}")

def reconnect_device(self):

try:

self.pipeline.stop()

self.init_camera()

self.show_status("设备已重新连接")

except Exception as e:

self.show_error(f"重连失败: {str(e)}")

def display_image(self, image, label):

if image is None:

return

if len(image.shape) == 2:

h, w = image.shape

q_img = QImage(image.data, w, h, w, QImage.Format_Grayscale8)

else:

h, w, ch = image.shape

rgb_img = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

q_img = QImage(rgb_img.data, w, h, ch * w, QImage.Format_RGB888)

scaled_img = q_img.scaled(label.width(), label.height(), Qt.KeepAspectRatio, Qt.SmoothTransformation)

label.setPixmap(QPixmap.fromImage(scaled_img))

def show_status(self, message):

self.statusBar().showMessage(message)

def show_error(self, message):

self.statusBar().showMessage(f"错误: {message}")

print(f"[ERROR] {message}")

def closeEvent(self, event):

if self.pipeline:

self.pipeline.stop()

event.accept()

if __name__ == "__main__":

app = QApplication(sys.argv)

window = OrbbecCameraApp()

window.show()

sys.exit(app.exec_())